How to Trust a Robot

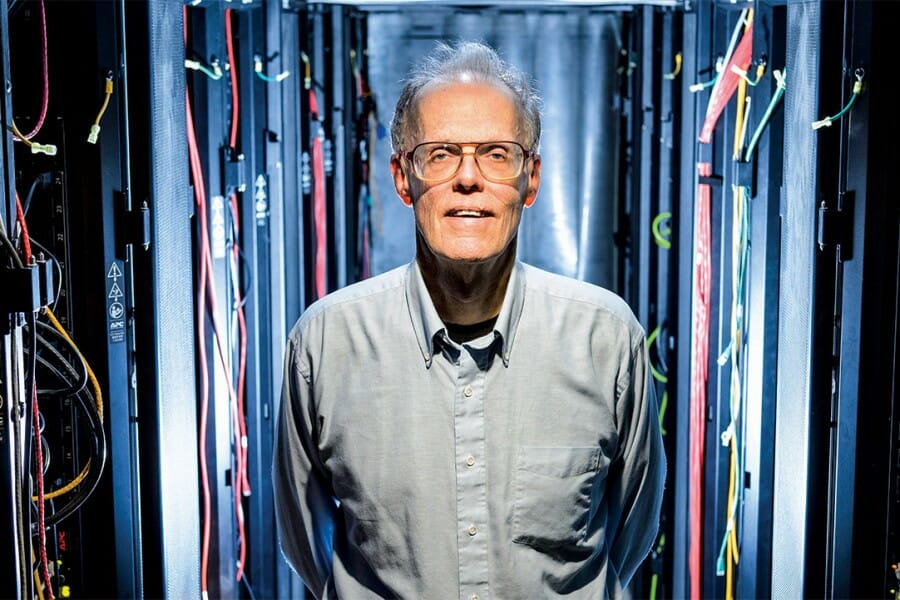

UW expert Bill Hibbard is part of a growing national conversation on AI ethics.

Transparency is a word that is perhaps best understood by its opposites: opacity, secrecy, murkiness, mystery. The inability to see inside something can provoke uncertainty, or fear, or hatred.

What’s behind that closed door?

What’s inside the black box?

A nontransparent thing can take hold of us and become the dark void under the bed of our imagination, where all the worst monsters hide — and in today’s world, those monsters are often robotic.

This sort of dark, emotional underpinning seems to inform the most popular depictions of artificial intelligence in American culture today. Movies such as The Terminator or Blade Runner or Ex Machina present a future in which artificial intelligence (AI) makes life inevitably bleak and violent, with humans pitted against machines in conflicts for survival that bring devastating results.

But if the fear of AI is rooted in the idea of it as something unknown and uncontrollable, then perhaps it’s time to shine a collective flashlight on Silicon Valley. And that’s exactly what UW emeritus senior scientist Bill Hibbard ’70, MS’73, PhD’95 aims to do.

A singular voice

Hibbard’s story has a few Hollywood angles of its own. He’s overcome a difficult childhood and an addiction to drugs and alcohol that thwarted his career for almost a decade after college. In 1978, sober and ready for a reboot, Hibbard joined the UW–Madison Space Science and Engineering Center (SSEC) under the late Professor Verner Suomi, who oversaw the development of some of the world’s first weather satellites. By the 1970s, the SSEC was producing advanced visualization software, and Hibbard was deeply involved in many of the center’s biggest and most complex projects for the next 26 years.

But satellites were ultimately a detour from Hibbard’s real intellectual passion: the rise of AI. “I’ve been interested in computers since I was a kid and AI since the mid-’60s,” he says. “I’ve always had a sense that it’s a very important thing that’s going to have a huge impact on the world.”

Many Americans are already applying artificial intelligence to their everyday lives, in the form of innovations such as Apple’s personal assistant, Siri; Amazon’s purchase recommendations based on customers’ interests; and smart devices that regulate heating and cooling in homes. But it’s strong AI — defined as the point when machines achieve human-level consciousness — that has some experts asking difficult questions about the ethical future of the technology.

In the last decade, Hibbard has become a vocal advocate for better dialogues about (and government oversight of) the tech giants that are rapidly developing AI capabilities away from public view. In 2002, Hibbard published Super-Intelligent Machines, which outlines some of the science behind machine intelligence and wrestles with philosophical questions and predictions about how society will (or won’t) adapt as our brains are increasingly boosted by computers. Hibbard retired from the SSEC two years later and devoted himself full time to writing and speaking about AI technology and ethics, work that has earned him invitations to various conferences, committees, and panels, including The Future Society’s AI Initiative at Harvard Kennedy School.

“Bill has a strong sense of ethics, which, coupled with his programming expertise, made him uniquely aware of blind spots that others working in ethics of AI don’t necessarily emphasize,” says Cyrus Hodes, director and cofounder of the Harvard initiative.

WHAT IS THE SINGULARITY?

Definitions vary, but one dictionary describes the term as “a hypothetical moment in time when artificial intelligence and other technologies have become so advanced that humanity undergoes a dramatic and irreversible change.” Or, in lay terms, we reach the singularity when machines become smarter than us. When does Bill Hibbard think we’ll get to the singularity, if ever? “I defer to Ray Kurzweil,” he says, referring to the author, futurist, and Google engineer. “We get to human-level intelligence by 2029, and we get the singularity by 2045.”

From transparency to trust

Most of the recent media coverage of AI ethics has focused on the opinions of celebrity billionaire entrepreneurs such as Elon Musk and Mark Zuckerberg, who debate whether robots will cause World War III (Musk’s position) or simply make our everyday lives more convenient (Zuckerberg’s). The debate generates headlines, but critics say it also centers the conversation on the Silicon Valley elite.

Similarly, says Molly Steenson ’94, an associate professor at the Carnegie Mellon School of Design, we’re distracted from more practical issues by too much buzz around the singularity (the belief that one day soon, computers will become sentient enough to supersede human intelligence).

“When I look at who’s pushing the idea [of the singularity], they have a lot of money to make from it,” says Steenson, who is the author of Architectural Intelligence: How Designers and Architects Created the Digital Landscape. “And if that’s what we all believe is going to happen, then it’s easier to worship the [technology-maker] instead of thinking rationally about what role we do and don’t want these technologies to play.”

But for Hibbard, the dystopian scenarios can serve a purpose: to raise public interest in more robust and democratic discussions about the future of AI. “I think it’s necessary for AI to be a political issue,” he says. “If AI is solely a matter for the tech elites and everyone else is on the sidelines and not engaged, then the outcome is going to be very bad. The public needs to be engaged and informed. I advocate for public education and control over AI.”

Tech-industry regulation is a highly controversial stance in AI circles today, but Hibbard’s peers appreciate the nuances of his perspective. “Bill has been an inspiring voice in the field of AI ethics, in part because he is a rare voice who takes artificial superintelligence seriously, and then goes on to make logical, rational arguments as to why superintelligence is likely to be a good thing for humanity,” says Ben Goertzel, CEO of SingularityNET, who is also chief scientist at Hanson Robotics and chair of the Artificial General Intelligence Society. “His reasoned and incisive writings on the topic have cut through a lot of the paranoia circling around the topic of superintelligence.”

Hibbard’s background as a scientist has helped him to build the technical credibility necessary to talk frankly with AI researchers such as Goertzel and many others about the societal issues of the field. “[Hibbard’s] clear understanding and expression of the acute need for transparency in AI and its applications have also been influential in the [AI] community,” Goertzel says. “He has tied together issues of the ethics of future superintelligence with issues regarding current handling of personal data by large corporations.” And Hibbard has made this connection in a way that makes it clear how important transparency is, Goertzel says, for managing AI now and in the future, as it becomes massively more intelligent.

Designing more democratic technologies

Like Hibbard, Steenson’s career in AI has its roots at the UW. In 1994, she was a German major studying in Memorial Library when fellow student Roger Caplan x’95 interrupted to badger her into enrolling in a brand-new multimedia and web-design class taught by journalism professor Lewis Friedland. Caplan, who is now the lead mobile engineer at the New York Times, promised Steenson that learning HTML would be “easy,” and she was intrigued enough to sign up. The class sparked what would become her lifelong passion for digital design and development, and Steenson went on to work for Reuters, Netscape, and a variety of other digital startups in the early days of the web.

In 2013, Steenson launched her academic career alongside Friedland on the faculty of the UW School of Journalism and Mass Communication before eventually joining Carnegie Mellon. Her scholarship traces the collaborations between AI and architects and designers, and she likes to remind people that the term artificial intelligence dates back to 1955. “Whenever someone is declaring a new era of AI, there’s an agenda,” she says. “It’s not new at all.”

Like Hibbard, Steenson is a strong advocate for broadening AI conversations to include a more diverse cast of voices, and she thinks designers and artists are especially well equipped to contribute. She quotes Japanese engineer Masahiro Mori, who in 1970 coined the term bukimi no tani (later translated as “the uncanny valley”) to describe the phenomenon where people are “creeped out” by robots that resemble humans but don’t seem quite right.

“Mori said we should begin to build an accurate map of the uncanny valley so that we can come to understand what makes us human,” she says. “By building these things that seem like they’re really intelligent, we understand what we are, and that’s something very important that designers and artists and musicians and architects are always doing. We interpret who we are through the things we build. How can we create designs that make us feel more comfortable?”

Bill Hibbard’s Top 10 AI Movies

On Wisconsin asked Hibbard to list his 10 favorite films featuring artificial intelligence.

- A movie I hope someone will make depicting political manipulation using AI on a system resembling Facebook, Google, Amazon, or the Chinese equivalents.

- A.I. Artificial Intelligence (2001), because of the strong emotional connection between AI and a human, which evoked a strong emotion in this viewer.

- Ex Machina (2015), because of the strong emotional connection between AI and a human, used by the AI to manipulate the human.

- π (1998), because of its great depiction of a tortured genius creating AI and his pursuit by people who want to exploit his work. (The movie says only that his creation can be used to predict the stock market, but this is implicitly AI.)

- 2001: A Space Odyssey (1968), because of its insightful story.

- 6 to 10. In no particular order: Her (2013); The Day the Earth Stood Still (1951); Colossus: The Forbin Project (1970); WarGames (1983); and Prometheus (2012).

An ethical education

Many AI futurists believe that ethics is now a critical part of educating the next generation of robotics engineers and programmers.

Transparency is high on the list of pressing issues related to AI development, according to Hodes, who is also vice president of The Future Society. He believes that the most pressing issue as we march toward an Artificial General Intelligence (the point where a machine can perform a task as well as a human) relates to moral principles. It is vital, he says, to start embedding ethics lessons in computer science and robotics education.

UW students are aware of this need. Aubrey Barnard MS’10, PhDx’19, a UW graduate student in biostatistics and medical informatics, leads the Artificial Intelligence Reading Group (AIRG), which brings together graduate students from across campus to discuss the latest issues and ideas in AI. AIRG dates back to 2002, making it the longest-running AI-related student group on campus.

And while members are mostly focused on discussing the technical aspects of AI and machine learning, Barnard says this year they’ve expanded their reading list to include AI history. They’ve also cohosted an ethics discussion about technology with the UW chapter of Effective Altruism, an international charity that raises awareness and funds to address social and environmental issues.

“To me, the cool thing about AI is computers being able to do more than they were programmed to do,” says Barnard, whose work investigates ways to discover causal relationships in biomedical data. “Such a concept seems paradoxical, but it’s not. That’s what got me interested.”

At the undergraduate level, computer science and mathematics student Abhay Venkatesh x’20 has organized Wisconsin AI, a new group that’s already generated enough buzz to get a sponsorship from Google. Venkatesh says the group aims to launch a variety of student-led AI projects, such as using neural networks to experiment with music, images, and facial recognition. As for ethics? “We consider such issues very important when discussing projects, and we’ve actually avoided doing a couple of projects for specifically this reason,” says Venkatesh, who plans to specialize in computer vision. “We’re planning to develop an ethics code soon.”

This sort of burgeoning interest in ethical conversations is exactly what Hibbard hopes to see replicated at the corporate tech-industry level. “All kinds of corporate folks say, ‘Our intentions are good.’ I understand where they’re coming from, but there are all kinds of unintended possibilities,” Hibbard says. “I would worry about any organization that’s not willing to be transparent.”

The light and the dark

Hibbard is emphatic that he is an optimist about AI, and he firmly believes that the benefits of the technology are well worth the challenge of mitigating its risks. “Part of our imperative as human beings is to understand our world, and a big part of what makes us tick is to understand it,” he says. “The whole scientific enterprise is about asking the hard questions. Our world seems so miraculous — is it even possible for us to develop an understanding of it? AI could be a critical tool for helping us do so.”

Yet he also believes that, if left unchecked, AI could become a weapon to repress instead of a tool to enlighten. Unlike what we see in the movies, which usually pit humanity against the machines, Hibbard thinks it’s more plausible that AI could cause conflict between groups of humans, especially if we decide to do things such as implanting computer chips inside of some humans (but not others) to give them faster, more powerful brains or other enhanced attributes. More immediately, though, he warns that significant social disruption could occur if robots continue to displace human jobs at a rapid rate.

“No one really knows exactly what’s going to happen. There’s a degree of disagreement and debate about what’s going on,” he says, adding that nothing is inevitable if we begin to pay attention — and require tech companies to be transparent about what exactly they’re doing and why. “I want the public to know what’s happening, and I want the people developing those systems to be required to disclose.”

Sandra Knisely Barnidge ’09, MA’13 is a freelance writer in Tuscaloosa, Alabama.

Published in the Summer 2018 issue
