A New Age of Technology

Researchers at the UW’s School of Computer, Data & Information Sciences find innovative ways to improve our daily lives.

Stop for a moment and imagine you have a robot butler. How does it know what to do? What must you tell it to make it obey you?

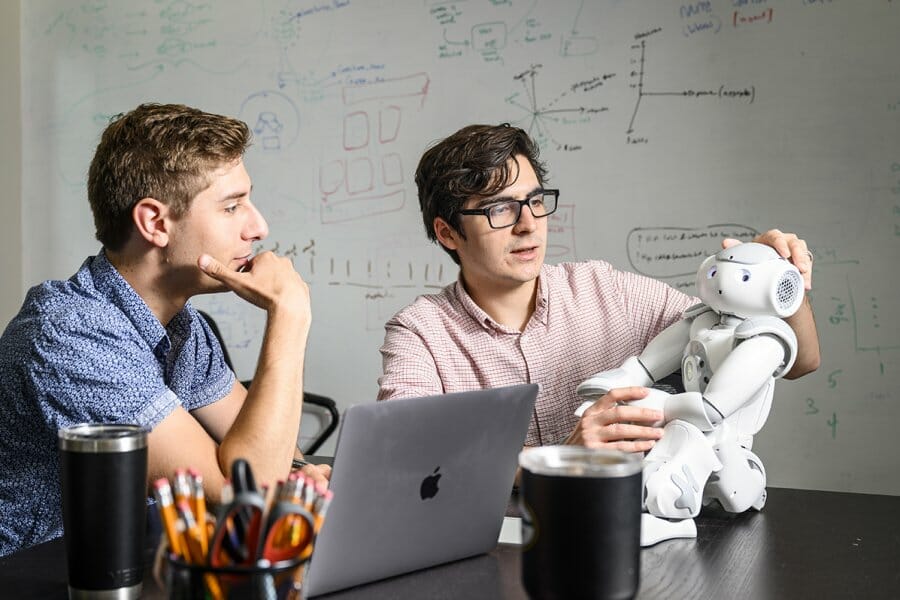

For Bilge Mutlu, these questions are far from fanciful. For the past 12 years, he has directed UW–Madison’s People and Robots Lab.

“Should your robot butler ask whether you want tea or coffee?” asks Mutlu, professor of computer science, psychology, and industrial engineering. “Or should you merely need to say, ‘Just bring my beverage, robot,’ because maybe you’re going to offload the beverage decision to your coffee machine, so the robot doesn’t worry about it?”

To Mutlu, it sometimes seems he has spent his life thinking about mechanical companions. When he was five years old and his family moved to a new neighborhood in Turkey, children wouldn’t play with him. “I made up a story,” he says. “I said, ‘My uncle brought me a robot to play with, so I don’t need to play with you,’ and here I am at age 42 designing robots.”

His life changed in 1999, when he was 23, working in Istanbul as a product designer for the appliance maker Arçelik. He heard the term smart appliances and recalls, “I realized computers were going to play a bigger role in design. I was totally unprepared to handle that, but I knew being ‘smart’ no longer meant designing dials and buttons. Whatever ‘smart’ was going to be, it wasn’t what I was doing.” So he came to the United States and earned his PhD from Carnegie Mellon in human–computer interaction.

Today Mutlu’s goal is developing robotic technologies that seamlessly mesh with people’s lives. “You need a deep understanding of the context of how robots will be used,” he says. “Otherwise they’re not going to succeed. You can build the most amazing robot, but if people don’t want it, they don’t want it, and it’s not a success.”

With the aid of two postdocs and 12 graduate students, Mutlu holds the reins on 10 projects funded by NASA, the National Institutes of Health, and the National Science Foundation. All have the same goal: to make robots as commonplace as cats, dogs, and delivery trucks. His teams are designing robotic manufacturing tools for Boeing, creating a smart display for the senior-citizen interface Elder Tree, teaching a robot how to perform the surprisingly challenging task of opening an oven door, figuring out how to program robots by talking to them, and field-testing Misty, a robot reading buddy that lives, so to speak, with families and has 24 emotions.

“The People and Robots Lab is a very impressive interdisciplinary space for the study of emerging interactive technologies and one of the top labs internationally for exploring human–robot interaction,” says Selma Sabanovic, director of the R-House Laboratory for Human–Robot Interaction at Indiana University Bloomington.

Just as people learned to take for granted TV, the internet, and voice-based computer systems such as Siri and Alexa, Mutlu predicts that in 20 years robotic technologies will have advanced beyond warehouse and delivery uses to the point where people will buy helper and companion robots as routinely as they now purchase laptops or smartphones.

“This thing will roll into your room, say things, and listen to you,” he says. “Things are about to change in very big ways.”

Mutlu once worked alone. But as a consequence of the 2019 creation of the UW’s School of Computer, Data & Information Sciences and its incredible popularity among students, five assistant professors — Yuhang Zhao, Corey Jackson, Yea-Seul Kim, Adam Rule, and Jacob Thebault-Spieker — are also researching human–computer interaction. Along with faculty at the UW Department of Industrial and Systems Engineering, which is doing similar work on campus, these researchers are developing solutions that enable computer-based technology to improve our daily lives.

Each of these new hires spearheads research as diverse as Mutlu’s, tackling everything from medical recordkeeping to household safety to self-driving cars. But one theme unites them — making computers and robots better so they can empower people.

“Now I Can Be a Superman!”

For data scientist Yea-Seul Kim, empowerment might be too mild a word.

“The accurate way of perceiving things has to be a human right,” says Kim, who is developing tools and algorithms to help low-vision people interact with data. “Everyone should be able to make decisions based on the same information. Companies use huge data in clever ways, but individuals have less expertise and fewer resources. My vision is to shrink that gap, so people can make decisions as if they had the techniques to analyze huge amounts of data.”

Kim, Mutlu, and Yuhang Zhao make up the UW Department of Computer Sciences’ Human–Computer Interaction Group. Kim and Zhao teamed up for a study in which they asked visually impaired people how to best replace online graphics with “alt text,” which describes the content of an image. There are nearly 300 million vision-impaired people, according to the World Health Organization, and for them, understanding visual data poses a daunting challenge.

“I want to imitate the way sighted people would skim the visualization,” one test subject told Kim and Zhao. Another interviewee offered a possible solution: combine alt text with an audio version of a graph whose pitch would rise and fall with levels on the graph. The duo’s paper ended up proposing that alt text be automatically created by a system that detects trends in charts’ visual data.

Zhao has another project on the front burner, literally. She, Mutlu, and optometrist Sanbrita Mondal, the head of Low Vision Services in the UW’s Department of Ophthalmology and Visual Sciences, are studying cooking habits of low-vision people.

“These patients can’t function in the kitchen,” says Mondal. “They’re afraid they’ll burn or cut themselves. They eat frozen dinners or reheatable meals, which affects their health.”

Zhao plans to outfit study participants with augmented-reality smart glasses souped up with thermal cameras, depth sensors, and audio alarms. The super spectacles will tell wearers about stove-related dangers using multimodal feedback — colors to depict hot spots, defined contours of objects, and sounds if a wearer’s hand strays close to a high-temperature area.

No stranger to assisting people with disabilities, Zhao helped invent the Canetroller while interning at Microsoft. This high-tech “white cane” let visually impaired test subjects navigate a virtual-reality world in a lab. When users tapped into a virtual trash can, carpet, or table, it made warning noises, created vibrotactile feedback, and impeded contact with the object.

“Oh, now I can be a superman!” one trial user told her. The Canetroller may train low-vision people to use standard white canes in safe settings before venturing onto sidewalks, according to Zhao.

“Doing research with people with disabilities has been such a fantastic journey for me, though my background is pure computer science,” she says. “I’ve had many conversations with them to try to better understand their lives. It’s opened up my mind.”

Ending “Note Bloat”

Zhao’s work with patients has a counterpoint in the Information School (or iSchool) and its Collaborative Computing Group, which includes faculty members Adam Rule, Corey Jackson, and Jacob Thebault-Spieker. Rule wants to help stressed doctors. His field is cognitive ergonomics, especially medical informatics — how medical professionals use clinical notes to perform collaborative, data-driven work.

The epidemic of burnout among doctors has been intensified by “note bloat.” Physicians who once scribbled concise patient records now use tablets. U.S. doctors’ notes are four times longer than those of peers in other countries, according to Rule. “The highly trained American physician,” says the journal Annals of Internal Medicine, “has become a data-entry clerk, required to document not only diagnoses, physician orders, and patient visit notes, but increasingly low-value administrative data.”

“I want to develop tools to let doctors leverage the power of data and computation so they can focus more on patients rather than documenting patients,” Rule says. “We can build systems that let them access information in a central place and work more flexibly on the fly.”

Vast Disparities in Access

Human–computer interactions level the playing field. That’s what the iSchool’s Corey Jackson learned during the summer of 2009. A political science major, he had been planning a career in international law when he went on a One Laptop per Child internship after his junior year to the island of São Tomé off West Africa’s coast. He found himself teamed with computer science professors and, ever since then, has focused on human–computer interaction.

Jackson found that children’s homes had no electricity. Classrooms had one outlet, if that. “It made me realize the vast disparities in access to basic infrastructure. The most rewarding part was seeing children’s faces light up when we did programming modules,” he says.

Today Jackson works to transform citizen science at websites like Galaxy Zoo and Zooniverse, where thousands of laypeople collaborate with astronomers on projects with titles like “Help Scientists Search for Gravity Waves — the Elusive Ripples of Spacetime.” No experience is needed to do this crowdsourced research, and the sites offer few guidelines. Newcomers get confused, so Jackson ensures that the technology works and is accessible to users.

“We need to make sure people from different socioeconomic or racial backgrounds can use data and technologies we develop to advance their goals,” he says.

Crisis of Confidence

The same disparity also hurts rural areas. The iSchool’s Jacob Thebault-Spieker, whose expertise is social computing, learned that in middle school when his family moved from Saint Paul, Minnesota, to tiny, impoverished Sebec, Maine.

He grew up a self-described “computer nerd,” but when he saw peers take plush jobs at tech companies, Thebault-Spieker says, “I had a crisis of confidence. Working for Google to make its search engine a few milliseconds faster is important, but it didn’t resonate with me. I started trying to figure out how to use my skills to solve social problems.”

His work focuses on understanding patterns of behavior — geographic or otherwise — that create biases and disparities in systems like OpenStreetMap, Wikipedia, and Uber. He is addressing poor coverage of rural areas by OpenStreetMap, which Tesla uses in its autonomous-vehicle mapping systems. For example, cattle grates deter cows from wandering off pastures but let vehicles pass. Because mappers in urban areas may not know what these grates are, incorrect labeling could cause car accidents.

“If we’re talking about Tesla being the future of self-driving vehicles,” Thebault-Spieker says, “that’s not happening in Sebec.”

“The Robot Doesn’t Judge Me”

While others employ technology to solve specific problems, Mutlu’s robot reading-buddy project explores a more intimate connection between people and machines.

The first incarnation of his study in 2017 used Minnie, a 13-inch-tall desktop robot named after Minerva, the Roman goddess of wisdom. She was placed in test families’ homes for two weeks. The goal? To study whether children — 10 boys and 14 girls ages 10 to 14 — would enjoy reading with her, if that relationship would flourish over time, and if the experience would make them more avid readers.

Minnie had big, black, inviting eyes that moved. To appear more human when speaking, she would look away as if lost in thought. To mimic fidgeting, her white, plastic, humanoid head made random movements. Her stationary body held a microphone and a camera that used facial recognition to locate the child.

Programmed with 25 books that ranged from Goosebumps to Harry Potter, Minnie listened to children read. She appeared thoughtful. She summarized what they said and urged them to do better.

“The biggest shock in our study was that two weeks later,” says Mutlu, “kids were still relating to the character rather than saying, ‘This is stupid. I’m not talking to your robot anymore.’ ” The number of children who thought Minnie had emotions quadrupled during the study. Children said Minnie motivated them to read. Compared with robot-less children in a control group, they also reported that she helped them understand and remember books better.

Minnie became a friend. Children were glad she never criticized them. “I liked that the robot doesn’t judge me when I misspell,” one child said. Families sent Mutlu’s research partner photos of Minnie dolled up with clothes. Children made her feel comfy by cushioning her with pillows.

Mutlu is wary of creating machines humans may become too attached to. “Do I want people to fully assume a machine has emotions? I’m skeptical,” he says. “My goal is to make it more intuitive and natural for people to interact with these things. I don’t want to push the boundary of, like, ‘Oh, I want people to have deep relationships with them.’ ”

This past summer Minnie lost her job to Misty, a programmable consumer robot whose base model sells for $2,299. The 14-inch-tall white or black plastic Misty moves on treads. Her arms wave and point. Big, blue, cartoony eyes programmed with 24 emotions dominate her face screen.

Misty’s study gauges whether a robot reading buddy can boost children’s interest in science. Her emotions are calibrated to amuse children into maintaining a heightened interest in such things as mold and spiders. For example, if a scary spider is in a book, Misty flings up her arms in dismay and retreats. Her eyes turn red, their shapes expressing fear.

Mutlu has young children himself and worries parents might use robots as electronic babysitters. He limits his children’s screen time, and the robot reading-buddy project appealed to him because of its solitary, educational nature.

“I don’t think we’re competing with parents or social interactions, but I completely agree that [unsupervised children using robots] is a risk and something we have to think about,” he says.

For Misty’s next challenge, Mutlu will send her to stay with families for nine months. “What is it going to mean for a social robot to live with a family that long? How is it going to affect family interactions?” he asks. “This is very complicated stuff. We are trying to design it with the best of our knowledge.”

In the future, he says, home robots will be programmed to respond to each family member differently. Such a robot might say to a parent, “I can’t talk to you now” or “Can I play with the child?”

“It will be a very different paradigm for interaction,” says Mutlu.

“Do More, Do Better”

Some fear a future in which killer machines trample humans — and human rights. Mutlu, who is Catholic, is confident robots and other forms of human–computer interaction are part of a greater — and better — plan.

“What are our friends and families for?” he asks. “They’re for helping each other get to heaven. So why not build these machines to help people do more, do better, and do what they’d like to accomplish?”

The question, says Mutlu, is: “Can I get these systems and technologies to support that mission?” •

Freelance writer George Spencer is based in Hillsborough, North Carolina, and is the former executive editor of the Dartmouth Alumni Magazine. He received his basic programming from his parents.

Published in the Winter 2021 issue
